Background of LLMs

The development of language models has been a cornerstone of progress in artificial intelligence. Early systems were simple, relying on hand-written rules and statistical n-gram counts for basic text prediction and analysis. The introduction of machine learning and, more specifically, deep learning techniques greatly expanded their capabilities. The transformer architecture, introduced in 2017 in the seminal paper “Attention Is All You Need” by Vaswani et al., marked a significant leap forward. Its self-attention mechanism lets a model weigh each word against every other word in the input, vastly improving the understanding and generation of complex text.
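To make the idea of relating every word to every other word concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not the full transformer: the toy two-dimensional embeddings are made up, and the queries, keys, and values are the raw embeddings themselves, whereas a real model would first pass them through learned projection matrices.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: every position attends to every position."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query with each key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy embeddings for a three-word input (assumption for illustration).
tokens = ["the", "cat", "sat"]
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Assumption: Q = K = V = x, skipping the learned projections of a real model.
outputs, weights = self_attention(x, x, x)

for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how strongly one word attends to every word in the input, including itself; the rows sum to 1 because they come out of a softmax.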

Proprietary models, such as OpenAI’s GPT (Generative Pre-trained Transformer) series, showcased the potential of these advances, delivering strong performance in tasks like text completion, translation, and conversation. However, the proprietary nature of such models kept their weights and training details in the hands of a select few, raising concerns about the equitable distribution of AI benefits.

The response from the AI community was a decisive pivot towards open-source initiatives. These efforts were not just about creating alternatives but also about fostering a culture of transparency, collaboration, and innovation. Open-source LLMs are built on the principle that collective development can accelerate progress, mitigate risks of bias and unfairness, and democratize access to technology.

The Rise of Open-Source LLMs

The rise of open-source LLMs can be attributed to several key factors. The first is the increasing recognition of the limitations and ethical concerns associated with proprietary models. Issues such as data privacy, model transparency, and the monopolization of AI technologies by a few corporations have prompted a search for more inclusive and accessible alternatives.

Another significant factor is the advancement in open-source software and hardware, which has lowered the barriers to entry for developing and training complex models. The availability of high-quality, open-source datasets, along with improvements in computing resources, has made it feasible for independent researchers and smaller organizations to contribute to the field of LLMs.

Community-driven projects have been at the heart of this movement. Platforms like GitHub and Hugging Face have become central hubs for collaboration, allowing developers and researchers from around the world to share their work, build upon others’ achievements, and push the boundaries of what’s possible with LLMs. This collective effort has led to models that rival and, in some cases, surpass the capabilities of their proprietary counterparts.

Key Open-Source Models

The landscape of open-source LLMs is rich and diverse, with each model bringing its own strengths to the table. Two of the most notable models in this space are LLaMA and Mistral, but they are far from alone. Other projects, such as EleutherAI’s GPT-Neo and GPT-J, have also made significant contributions.

- LLaMA: Developed by Meta AI as a family of foundation models released in several sizes, LLaMA stands out for its efficiency and adaptability. It offers competitive performance across a range of tasks, from natural language understanding to content generation, making it a valuable resource for researchers and developers alike.

- Mistral: Developed by Mistral AI, Mistral distinguishes itself by its performance and by the permissive Apache 2.0 license under which the Mistral 7B weights were released. It has been benchmarked against proprietary models and shown to offer comparable, if not superior, results in certain scenarios. This model exemplifies the potential of open-source development to achieve cutting-edge results in AI.

- Other Models: GPT-Neo and GPT-J are examples of community-driven efforts to replicate and extend the capabilities of large-scale transformer models. These models have been pivotal in demonstrating that open collaboration can produce tools that are both powerful and accessible to a wide audience.

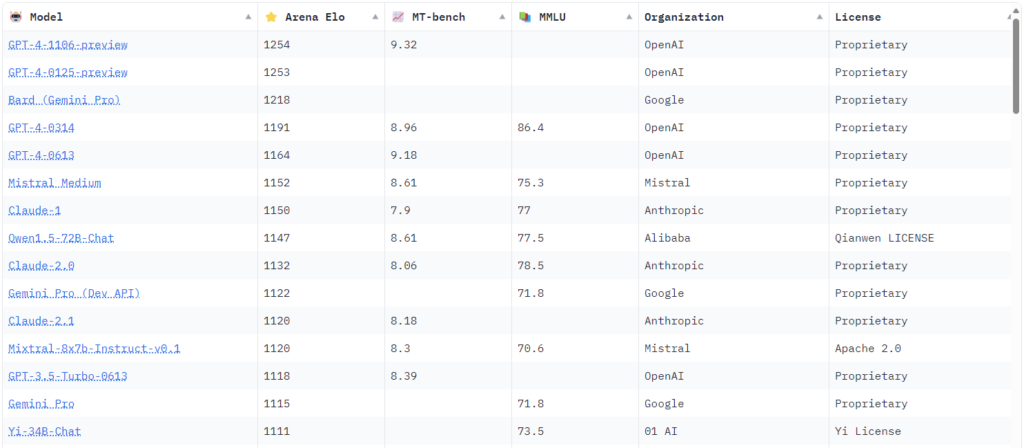

Leaderboard Benchmark

To objectively assess the performance of open-source LLMs, it’s essential to refer to standardized benchmarks. The “Chatbot Arena Leaderboard”, run by LMSYS and hosted on Hugging Face, serves as a widely used benchmarking platform, comparing models like Mistral against proprietary counterparts.

- Performance Metrics: Rather than relying on a single automated score, the leaderboard ranks models mainly by an Elo-style rating computed from crowdsourced, side-by-side human votes on anonymized model responses, and has reported benchmark scores such as MT-Bench and MMLU alongside it. Together these give a broad view of a model’s capabilities, from how helpful its responses are in open-ended conversation to how well it handles knowledge and reasoning tasks.

- Mistral’s Achievements: In this competitive arena, Mistral has demonstrated remarkable performance, matching or even surpassing proprietary models in certain metrics. This is a testament to the quality and potential of open-source models to contribute significantly to the field of AI.

- Implications: The success of Mistral and other open-source models in these benchmarks highlights the viability of collaborative, community-driven approaches to developing high-performance AI technologies. It challenges the notion that only proprietary models can lead the way in innovation and effectiveness.
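Arena-style leaderboards commonly turn pairwise human votes into a ranking with Elo-style ratings. The sketch below shows the standard Elo update in plain Python. The model names, the votes, the starting rating of 1000, and the K-factor of 32 are all illustrative assumptions; this is not the leaderboard’s actual data or its exact computation.

```python
def expected_score(rating_a, rating_b):
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))

def update_elo(ratings, model_a, model_b, outcome, k=32.0):
    """Update both ratings in place.

    outcome is 1.0 if A wins, 0.0 if B wins, and 0.5 for a tie.
    """
    ra, rb = ratings[model_a], ratings[model_b]
    ea = expected_score(ra, rb)
    ratings[model_a] = ra + k * (outcome - ea)
    ratings[model_b] = rb + k * ((1.0 - outcome) - (1.0 - ea))

def rank_models(votes, initial=1000.0, k=32.0):
    """Process votes in order and return (model, rating) pairs, best first."""
    ratings = {}
    for model_a, model_b, outcome in votes:
        ratings.setdefault(model_a, initial)
        ratings.setdefault(model_b, initial)
        update_elo(ratings, model_a, model_b, outcome, k)
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)

# Hypothetical votes: (model A, model B, outcome from A's point of view).
votes = [
    ("model-x", "model-y", 1.0),  # model-x preferred
    ("model-y", "model-z", 0.5),  # tie
    ("model-x", "model-z", 1.0),  # model-x preferred
]

leaderboard = rank_models(votes)
for name, rating in leaderboard:
    print(f"{name}: {rating:.1f}")
```

Because each update adds to one model exactly what it subtracts from the other, the total rating stays constant, and a win against a higher-rated opponent moves the ratings more than a win against a lower-rated one.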

By examining the key open-source models and their performance on recognized benchmarks, we gain insight into the dynamic and rapidly evolving landscape of AI research and development. The achievements of these models underscore the value of open-source initiatives in promoting innovation and accessibility in AI, and they hint at a future in which collaborative effort continues to break new ground.

Challenges and Limitations

Despite the promising advancements of open-source LLMs, several challenges and limitations persist, impacting their development and widespread adoption.

- Technical Challenges: Open-source LLMs often require vast amounts of data and computational resources for training, making them less accessible to individuals and organizations with limited resources. Additionally, maintaining model performance while ensuring privacy and security remains a complex challenge.

- Ethical Considerations: Issues such as bias, fairness, and ethical use are magnified in open-source projects due to their public nature and broad accessibility. Ensuring that these models do not perpetuate harmful biases or misinformation requires continuous effort and vigilance from the community.

- Sustainability: The reliance on volunteer contributions and public funding can pose sustainability challenges for open-source LLM projects. Ensuring long-term support and development requires funding and governance arrangements that balance openness with financial viability.

Community and Collaboration

The success of open-source LLMs heavily relies on the strength and engagement of the community. Collaboration across borders and disciplines has been a driving force behind the rapid advancement of these models.

- Platforms for Collaboration: Online platforms like GitHub and Hugging Face have become central to fostering collaboration, allowing for the sharing of code, datasets, and research findings. These platforms also facilitate discussions and feedback, which are crucial for iterative improvement.

- Contributions from Diverse Backgrounds: The open-source model encourages participation from a wide range of contributors, including researchers, hobbyists, and industry professionals. This diversity brings a wealth of perspectives and expertise, driving innovation and addressing challenges from multiple angles.

Future Prospects

The future of open-source LLMs looks promising, with several trends indicating continued growth and impact.

- Technological Advancements: Ongoing research and development are expected to address current limitations, leading to more efficient, accurate, and ethical models. Techniques like few-shot learning and model distillation offer paths to reducing resource requirements and improving accessibility.

- Wider Adoption and Impact: As these models become more capable and user-friendly, their adoption across industries is likely to increase, driving innovation in fields ranging from healthcare to education to entertainment.

- Community Growth and Governance: The open-source community is likely to expand, bringing in more contributors and stakeholders. This growth will necessitate the development of governance models that ensure the ethical and sustainable advancement of LLM technologies.
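As an illustration of the model distillation mentioned under Technological Advancements, the sketch below computes a common form of the distillation loss: the KL divergence between temperature-softened teacher and student output distributions, scaled by the square of the temperature. The logits and the temperature of 2.0 are made-up values for illustration, and real training would combine this term with a standard loss on the true labels.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (higher = softer)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical logits over three classes (assumption for illustration).
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]   # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # disagrees with the teacher

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
print(f"close student loss: {loss_close:.4f}")
print(f"far student loss:   {loss_far:.4f}")
```

A smaller student trained to minimize this loss learns to reproduce the larger teacher’s output distribution, which is one way distillation can lower the cost of running a model.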

Conclusion

Open-source LLMs represent a significant shift in the AI landscape, democratizing access to cutting-edge technology and fostering a culture of collaboration and innovation. While challenges remain, the community-driven approach has proven to be a powerful model for advancing AI research and development. The future of open-source LLMs is not just about technological breakthroughs but also about building an inclusive, ethical, and sustainable ecosystem for AI.